Early work in Data Science for/in/about SE

2025-07-29

Early Days

“Computers”

Still from the excellent movie ‘Hidden Figures’

Era of Hubris: mainframes and the software to run them

The Mythical Man-Month and Peopleware

- “adding more people to a late project makes it later” (Brooks)

- “the logical structure of a system reflects the organization that built it” (Conway’s Law)

- one cannot simply … engineer complex socio-technical systems

The Role of Data

- you cannot manage what you cannot measure?

- Goodhart’s law: “When a measure becomes a target, it ceases to be a good measure”.

- e.g., treating lines of code as productivity

- But surely something is worth measuring?

- “Who writes code faster” (10x)

- “How long is this compile cycle?”

- “What file is worth fixing?”
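As a concrete, deliberately naive illustration of the last question, one might rank files by churn: how many commits touched each file. This is a minimal sketch under stated assumptions, not a method from the readings. The commit data and file names below are hypothetical; in practice the input might be parsed from `git log --name-only`. Per Goodhart's law, such a ranking is a prompt for discussion, not a target.

```python
from collections import Counter

# Hypothetical commit history: each inner list is the set of files one commit touched.
# Made-up data for illustration; real input might be parsed from `git log --name-only`.
commits = [
    ["parser.c", "lexer.c"],
    ["parser.c"],
    ["util.c", "parser.c"],
    ["lexer.c"],
]

def churn_by_file(commits):
    """Count how many commits touched each file (a simple churn metric)."""
    counts = Counter()
    for files in commits:
        # set() so a file listed twice in one commit is only counted once
        counts.update(set(files))
    return counts

def hotspots(commits, top_n=2):
    """Return the top_n most frequently changed files as (file, count) pairs."""
    return churn_by_file(commits).most_common(top_n)

print(hotspots(commits))  # [('parser.c', 3), ('lexer.c', 2)]
```

Churn alone says nothing about why a file changes often (active feature work versus recurring bugs), which is exactly the kind of limitation the slide's questions invite.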

Exercise: Team metrics

QR Code (links to the SonarCloud dashboard)

Steps

Visit https://sonarcloud.io/summary/new_code?id=mediawiki-core and explore the data science dashboard there.

- Q. Which part of the code needs attention? How do you know this?

- Q. What do you make of “issues”? Where do you start with a remediation project?

TPS (Think-Pair-Share)

- Think about the following question: “You are the software engineering manager or team lead at a big company. What is one key question you want answered in order to run your team effectively?”

- Write down a quick answer, perhaps drawing on your past experience.

- Then pair with your table partner to share and discuss your answers. We will then collect a few responses from the class as a whole.

TPS Menti

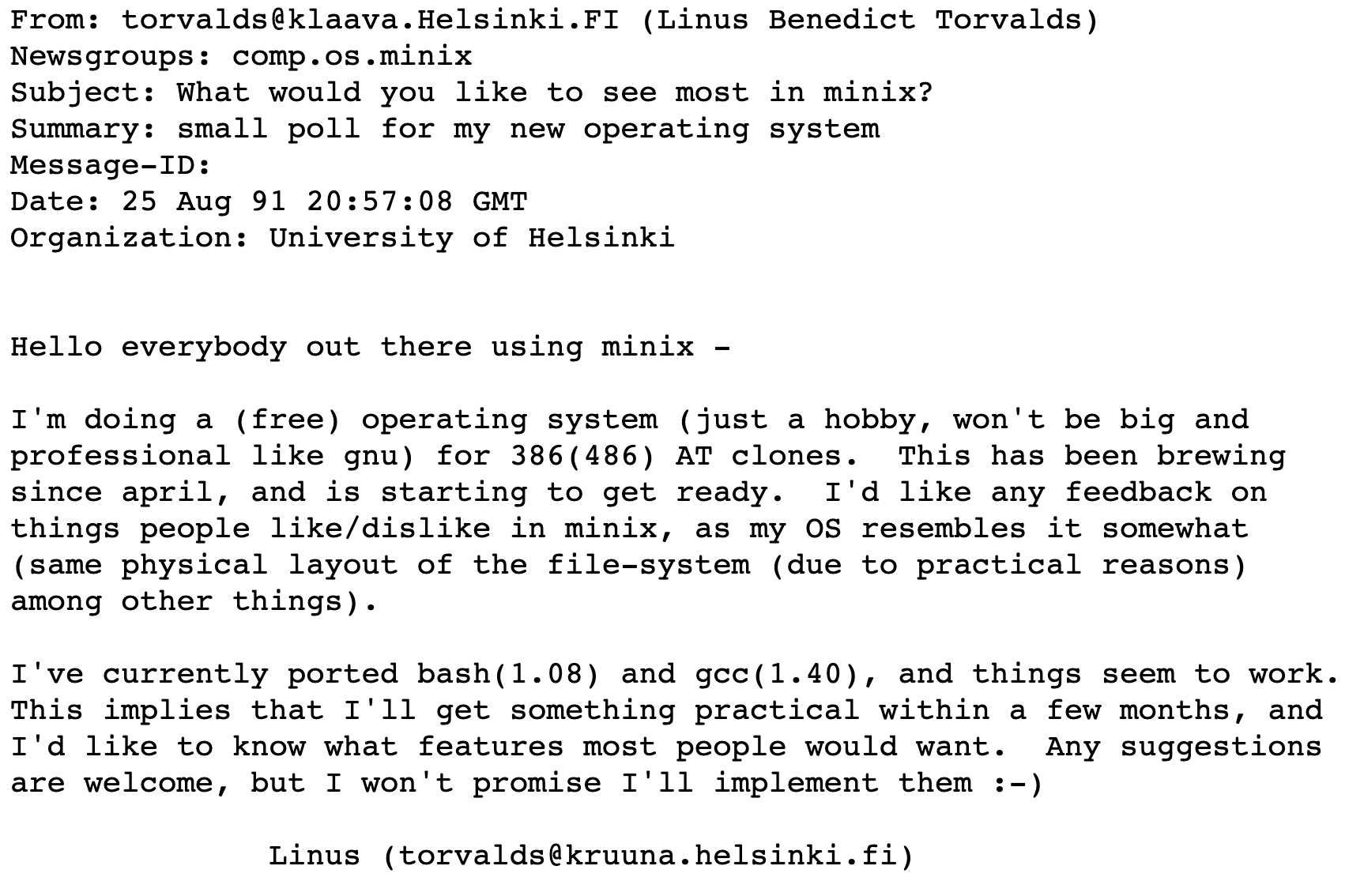

The Open Source Era

Data Everywhere

- plenty of data became available for studies like the 10x study;

- the challenge for researchers was (and is) getting access to data that is cheap to acquire (e.g., in licensing terms) yet still representative of the problems in practice.

- The paper by Tu and Godfrey (see readings) represents one of these early approaches.

Empirical Software Engineering

In the 90s, it was novel even to be able to get source code!

This triggered a focus on empirical SE, as Kitchenham outlines.

Now there was enough data to be able to systematize what we were studying and how we were studying it.

One issue remained, of course: balancing industrial relevance against academic tractability.

Bringing Insights into Practice: The Sapienz Example

From the SCAM Facebook paper by O’Hearn and Harman:

- Irrelevant: ‘Academics need to make their research more industrially-relevant’,

- Unconvincing: ‘Scientific work should be evaluated on large-scale real-world problems’,

- Misdirected: ‘Researchers need to spend more time understanding the problems faced by the industry’,

- Unsupportive: ‘Industry needs to provide more funding for research’,

- Closed System: ‘Industrialists need to make engineering production code and software engineering artifacts available for academic study’, and

- Closed Mind: ‘Industrialists are unwilling to adopt promising research’.

Insights into the human aspect

Developers are, in practice, not short of lists of fault and failure reports on which they might choose to act.

Thus the tool (the data science output) must produce reports that have:

- Relevance: the developer to whom the bug report is sent is one of the set of suitable people to fix the bug;

- Context: the bug can be understood efficiently;

- Timeliness: the information arrives in time to allow an effective bug fix;

- Debug payload: the information provided by the tool makes the fix process efficient.

Summary

- people, and managers in particular, need to understand what is actually happening

- software metrics are one (limited) tool for this

- there is more than just metrics: we need to consider wider perspectives, such as the human aspect

- enormous amounts of data (of varying quality) available

Neil Ernst ©️ 2024-5